With algorithms handling much of the bidding and targeting, ad creative testing is often where meaningful gains are made. The problem is that most testing approaches are random, fragmented, or too slow to drive consistent lift.

Let’s break down how to structure tests, set KPIs, build variations, and scale winning concepts. If you manage ad spend across Meta, TikTok, YouTube, or programmatic channels, this framework will help you test faster, learn smarter, and optimize creatives at scale.

Key takeaways

- As algorithms automate bidding and targeting, testing creative is where marketers win or lose.

- A testing framework beats random experimentation. It brings structure, repeatability, and better learning across campaigns and channels.

- Clear KPIs make creative testing actionable. Tie each test to a metric that reflects a business goal, such as CTR, CPA, or ROAS, to guide decision-making.

- Start with big ideas, then optimize details. Test concepts first, then iterate on copy, visuals, and format once direction is validated.

- Avoid common pitfalls, like testing too many variables, misreading early signals, or letting fatigue skew results.

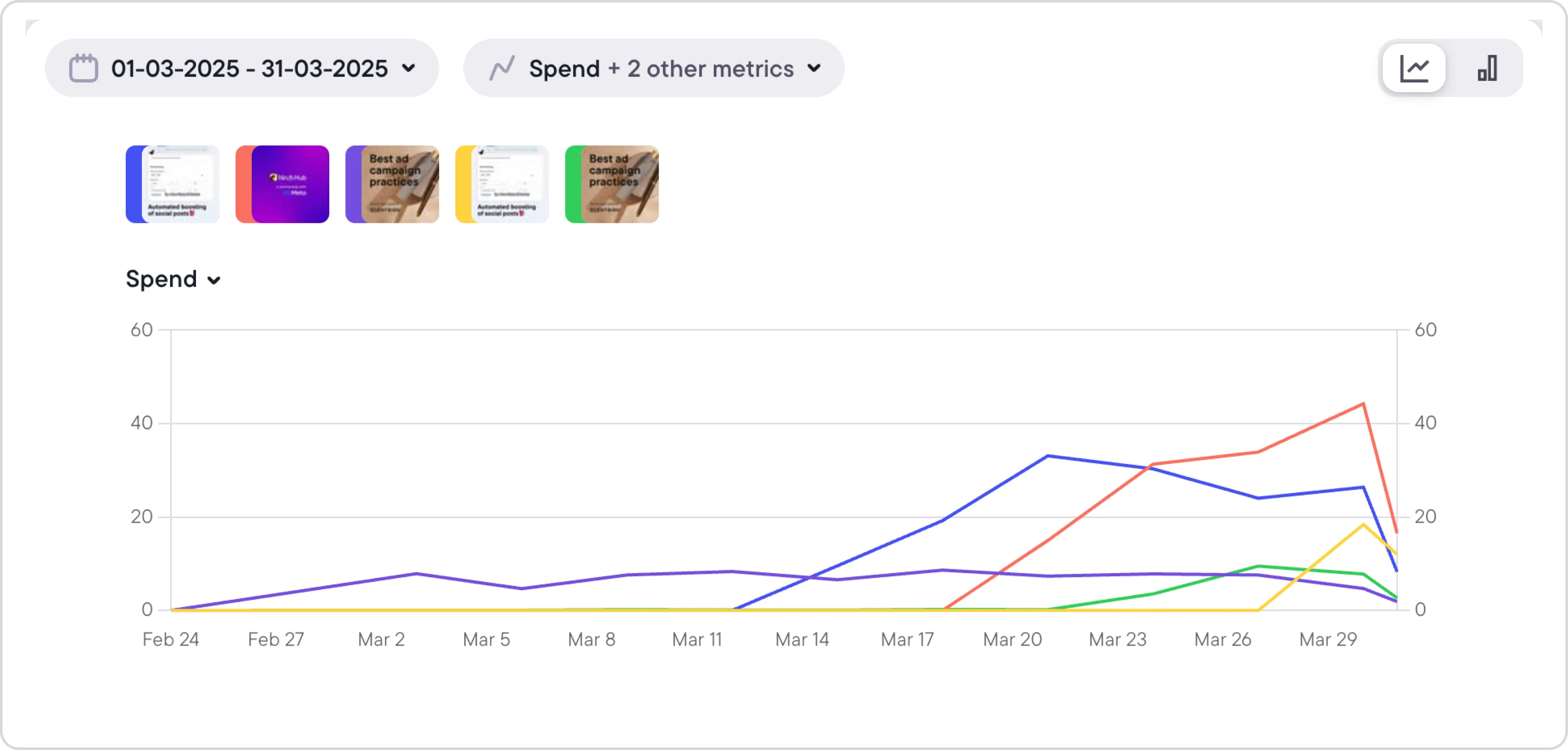

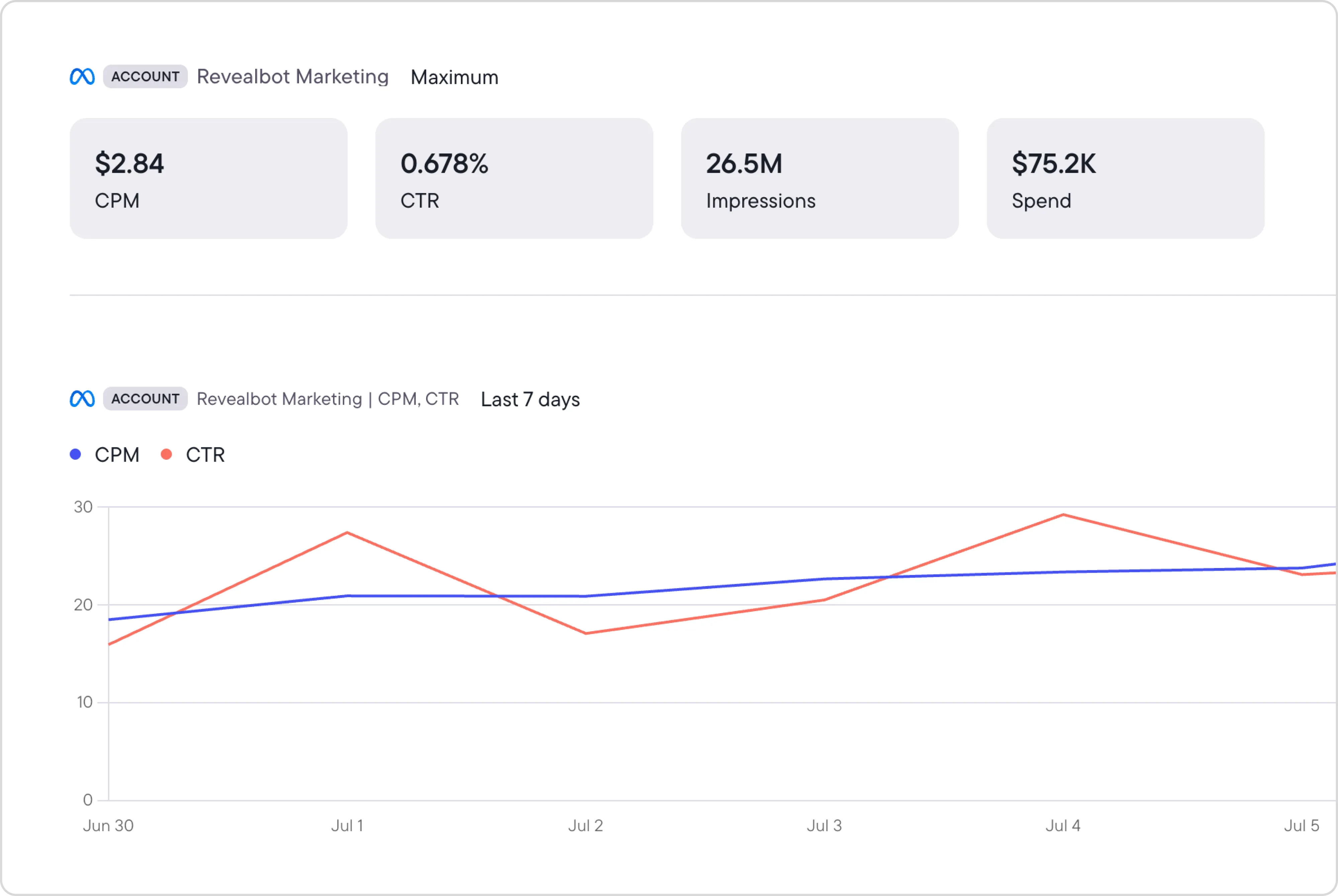

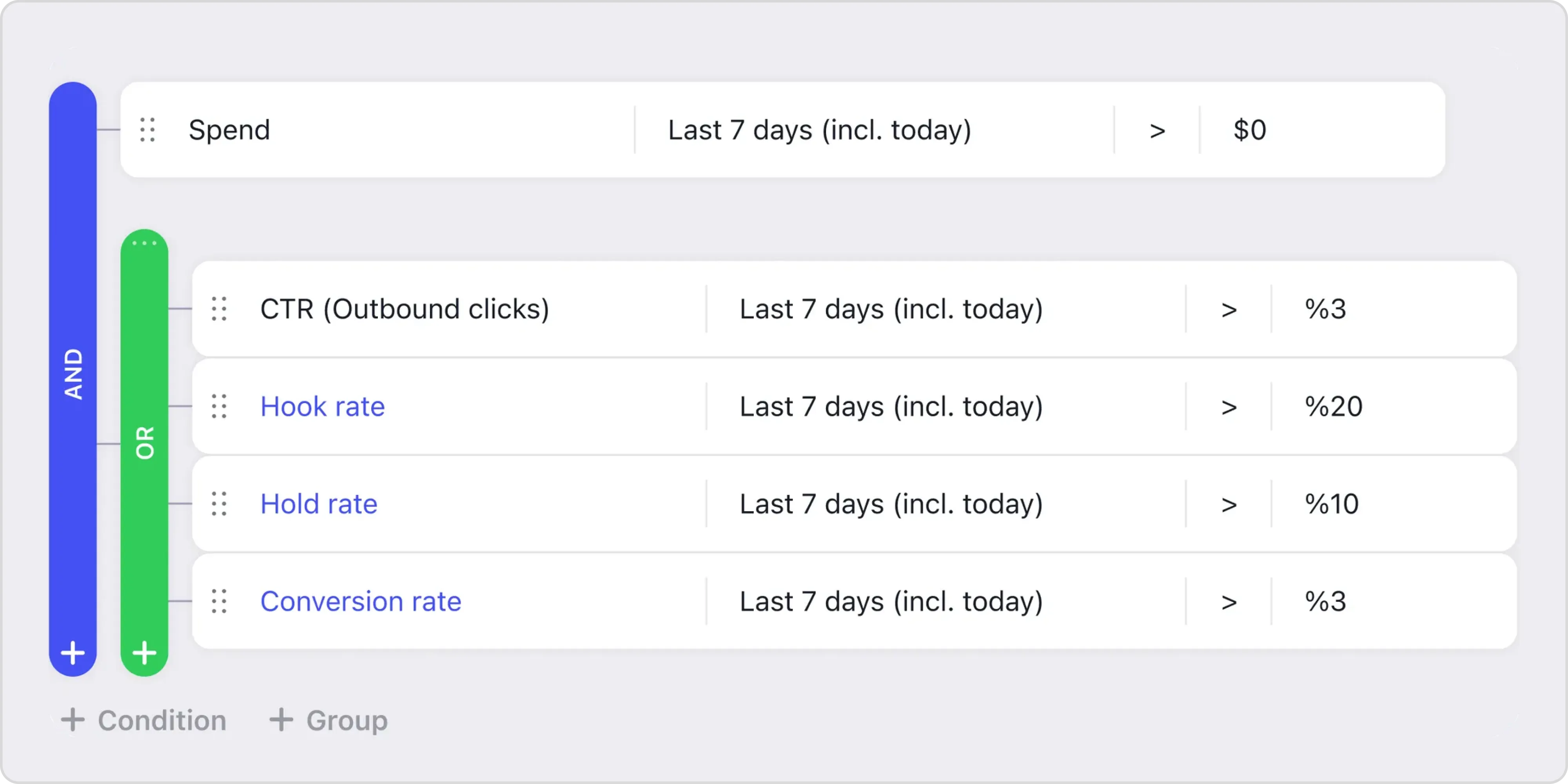

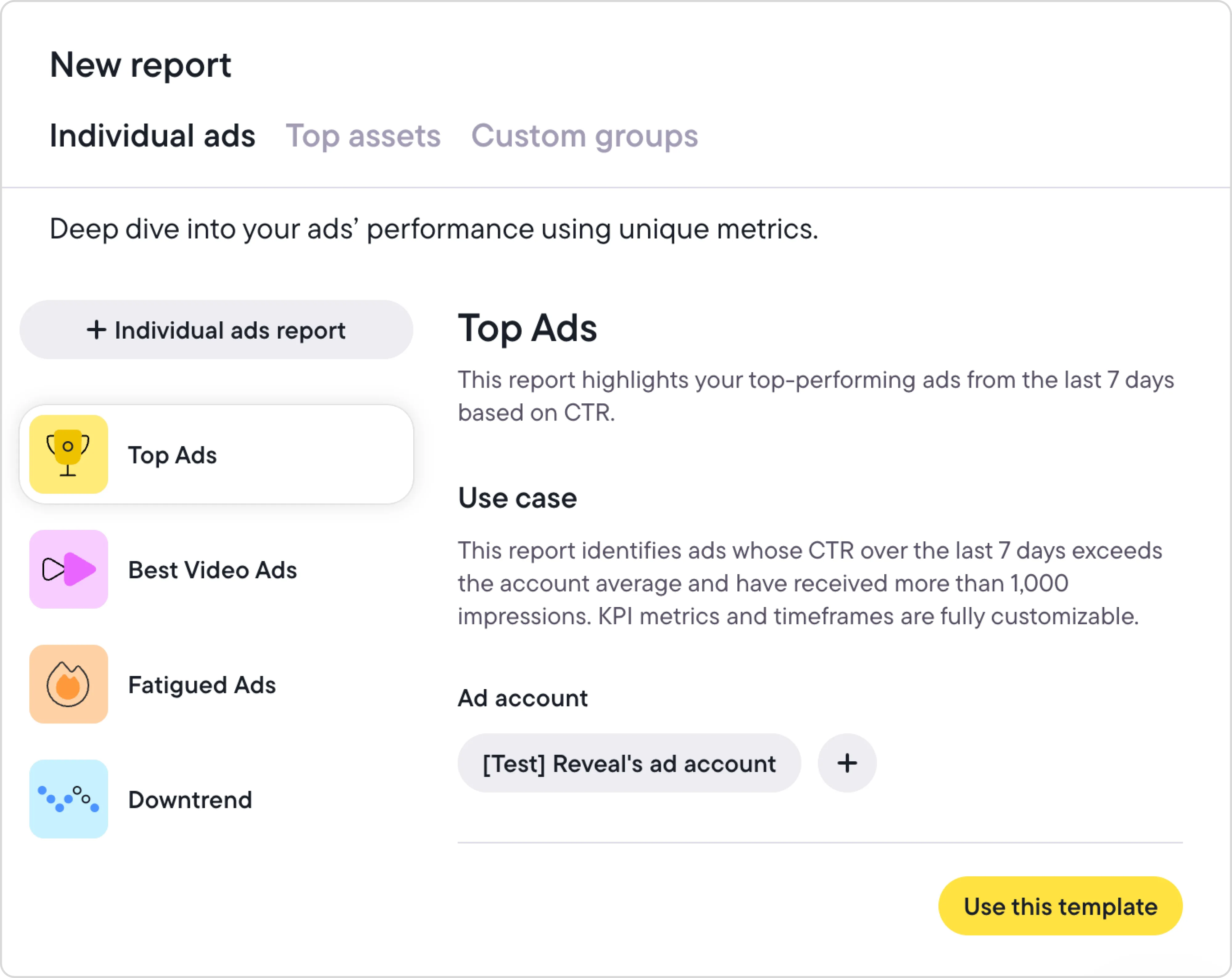

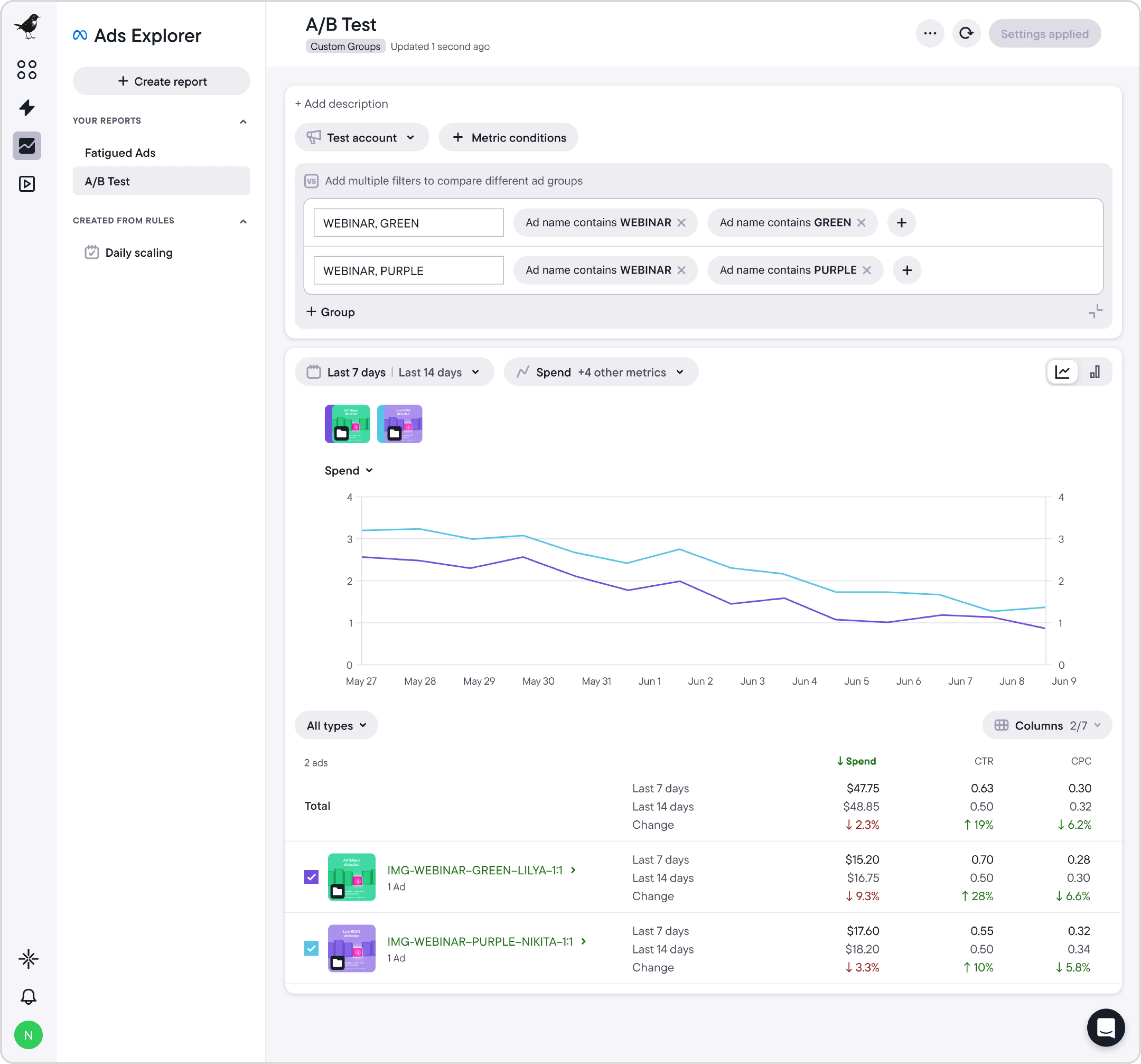

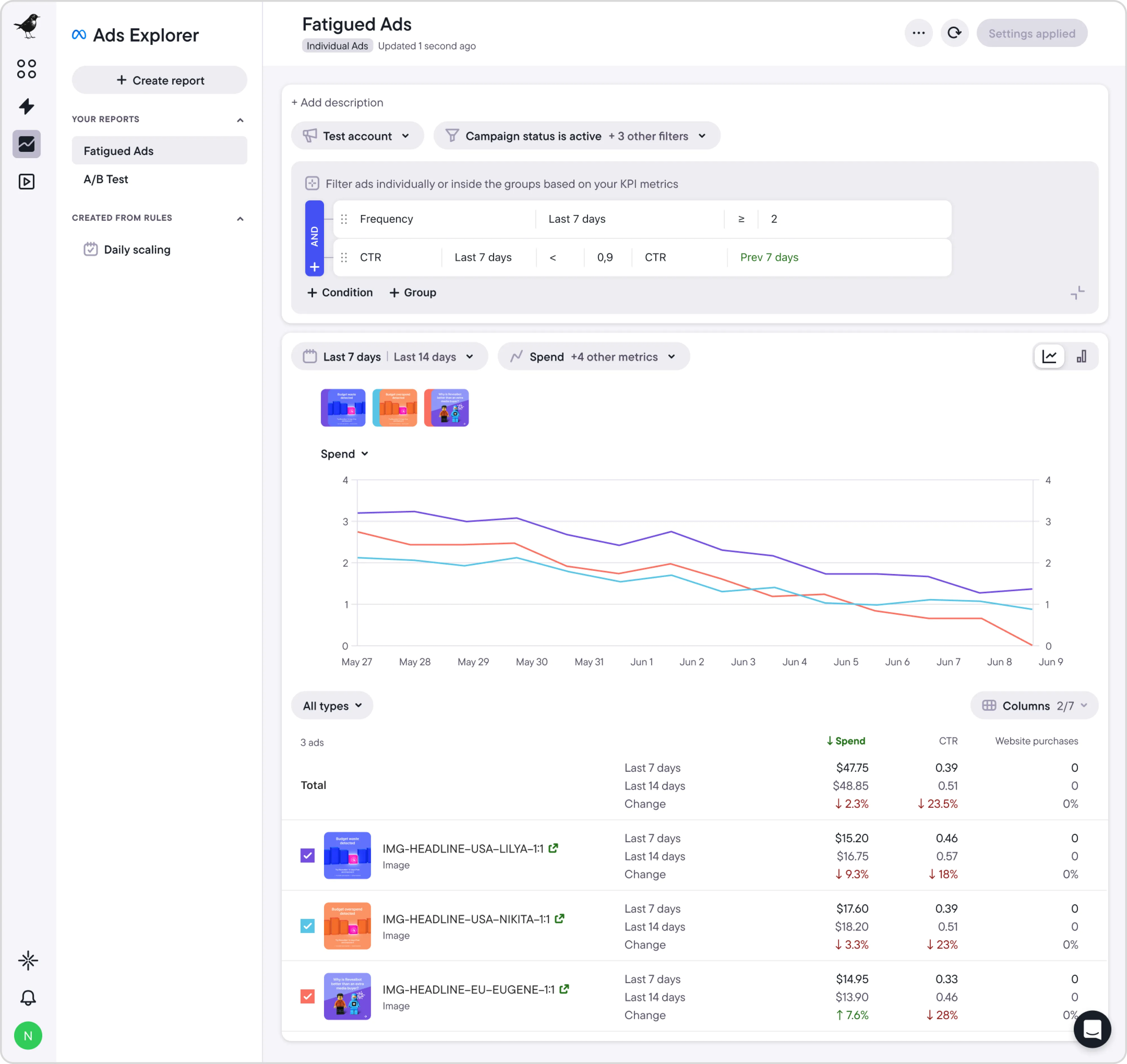

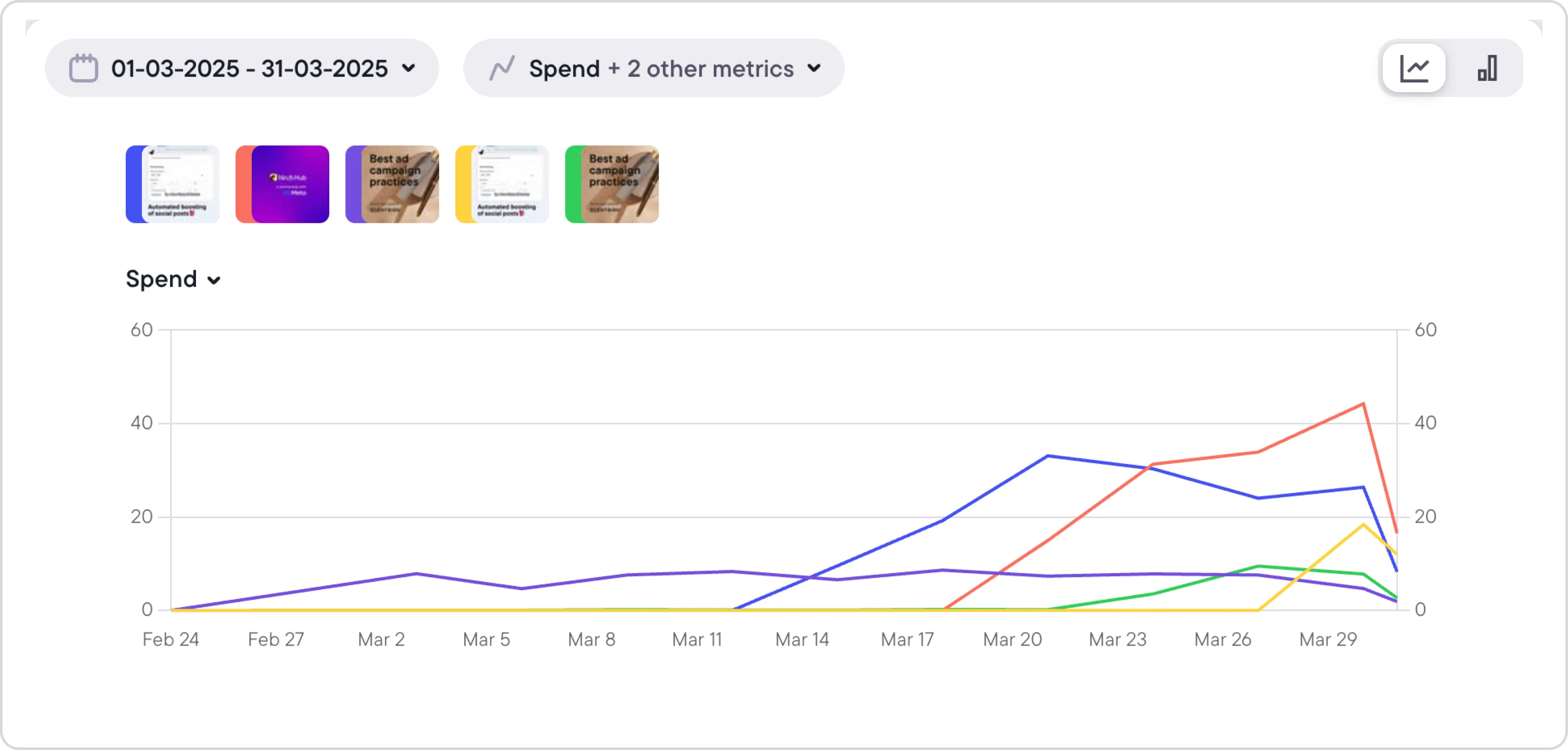

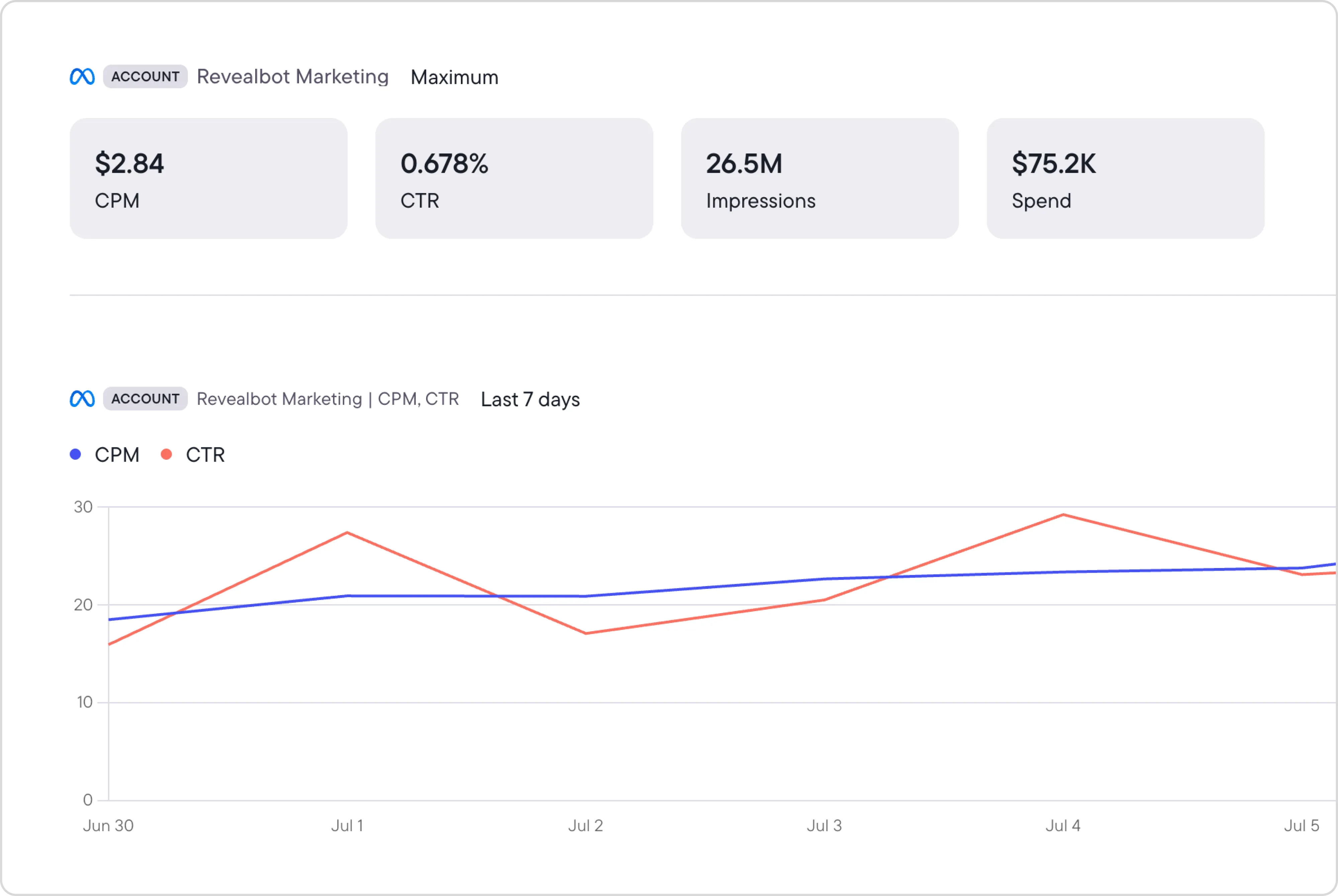

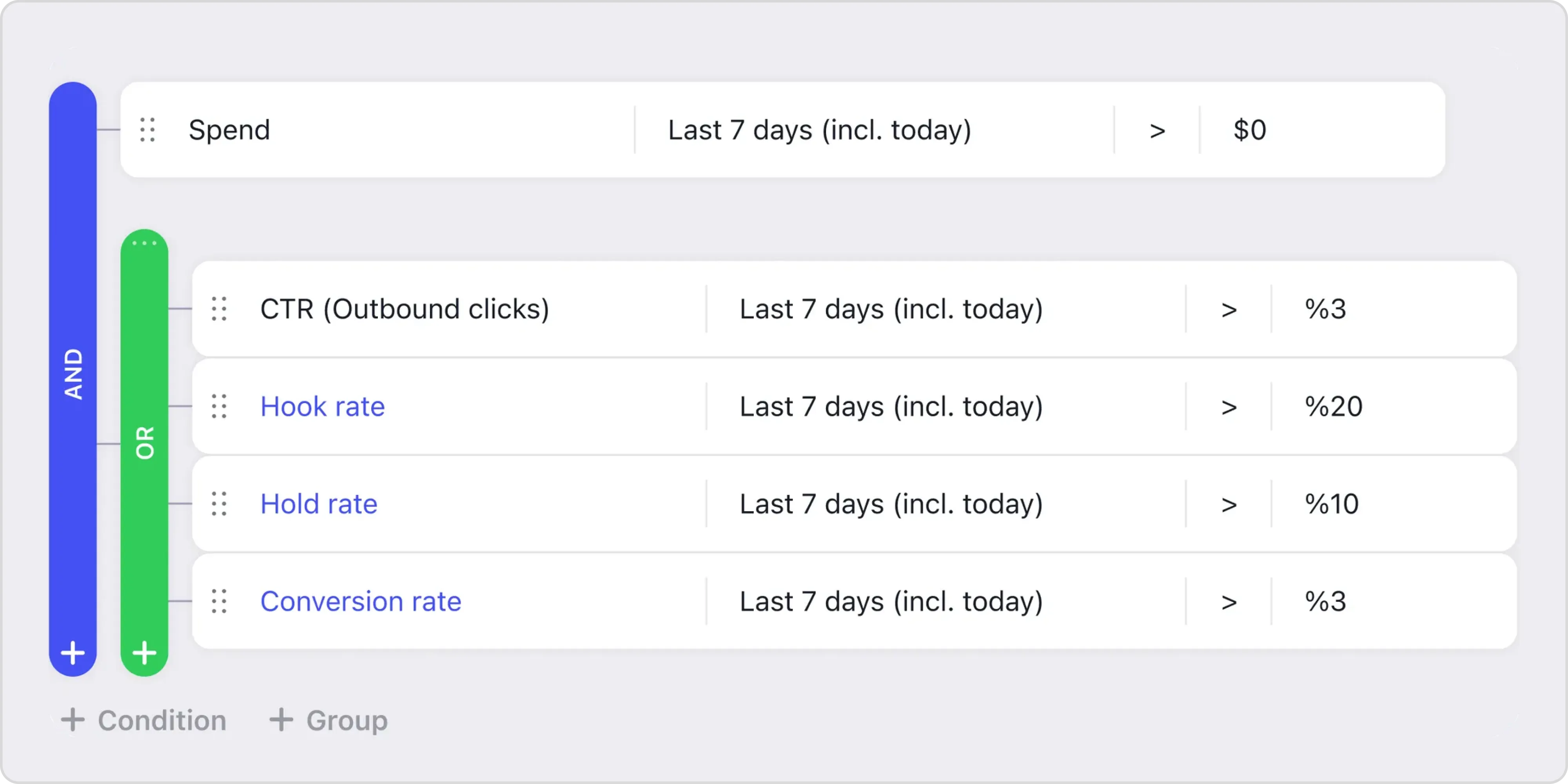

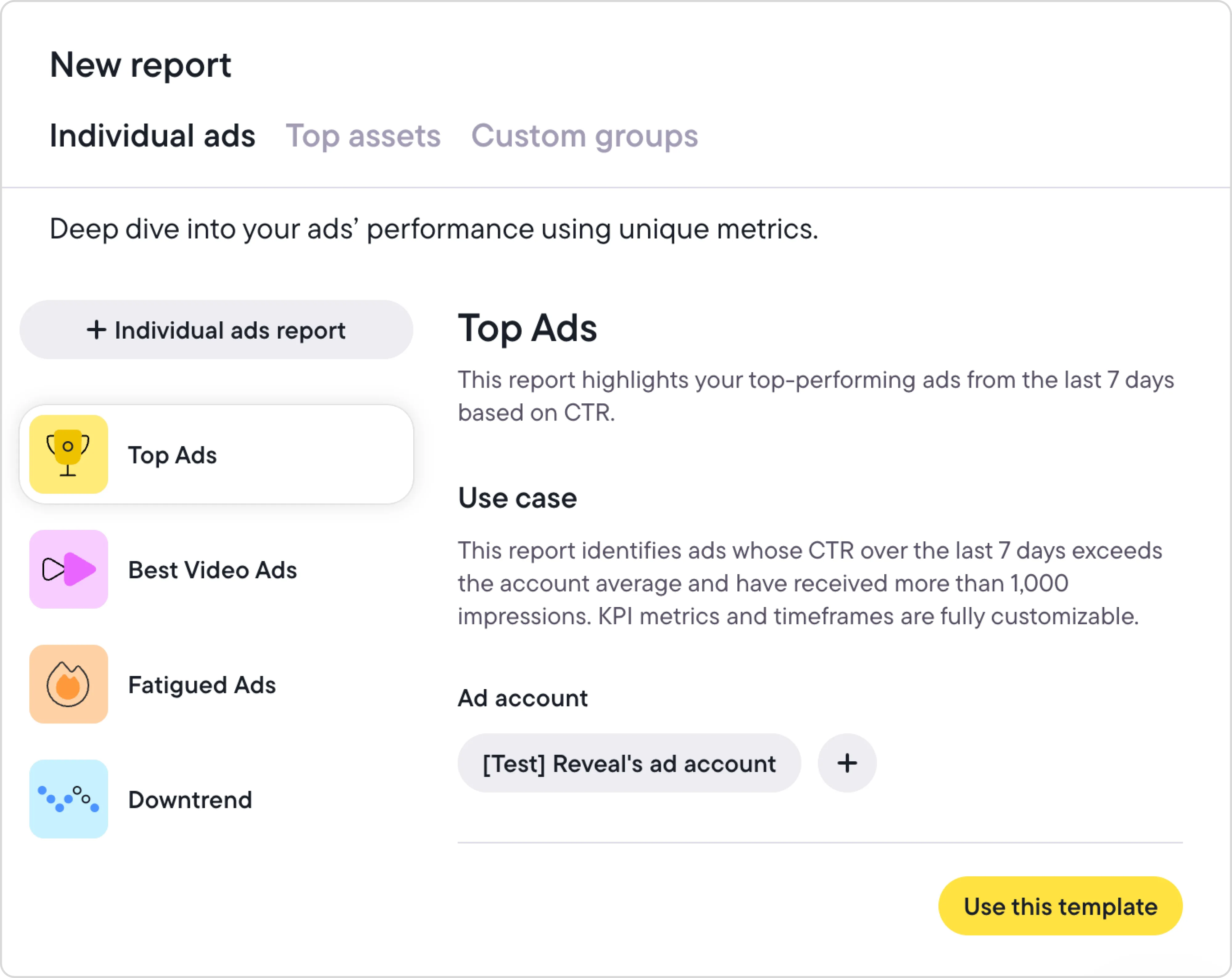

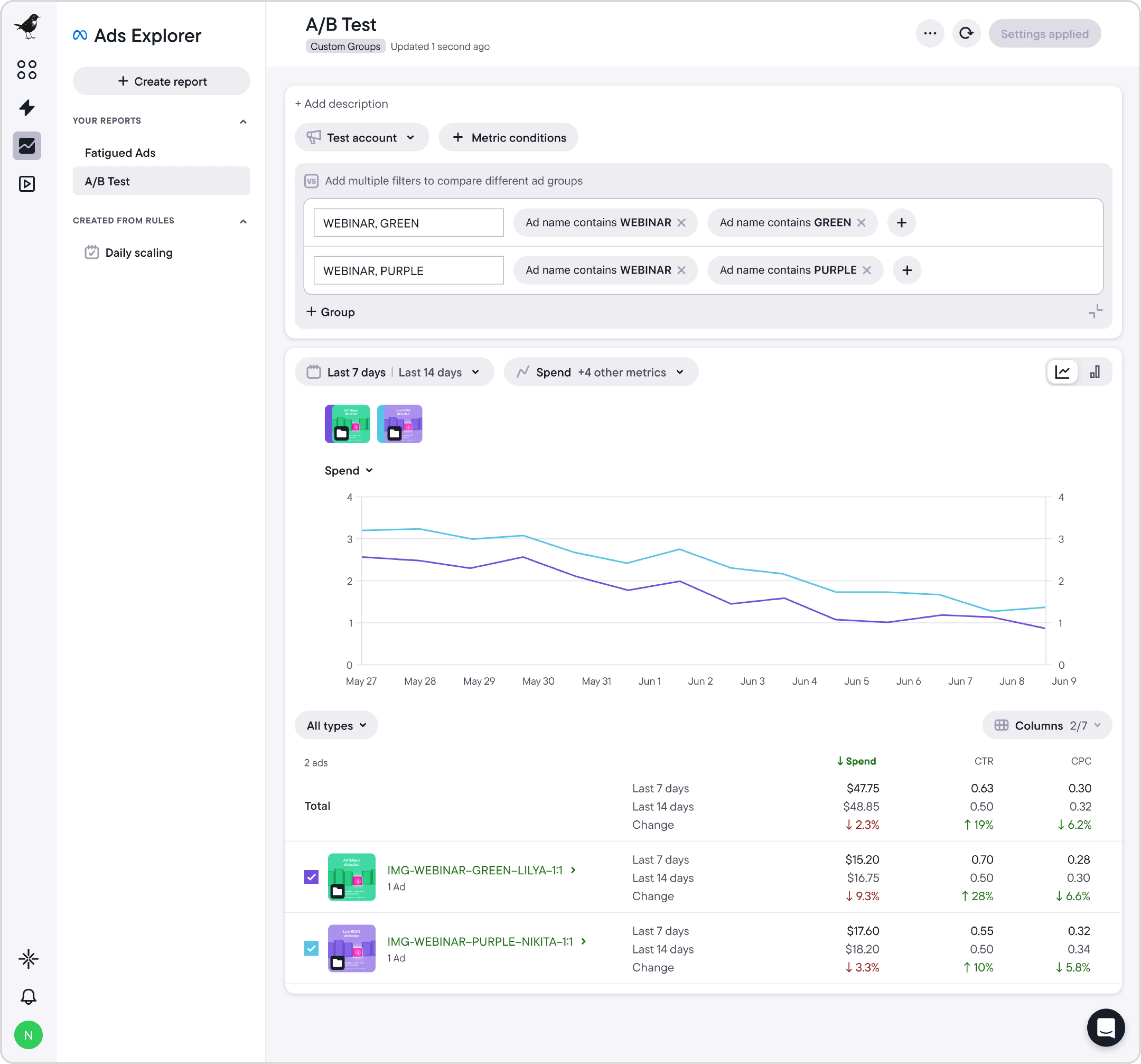

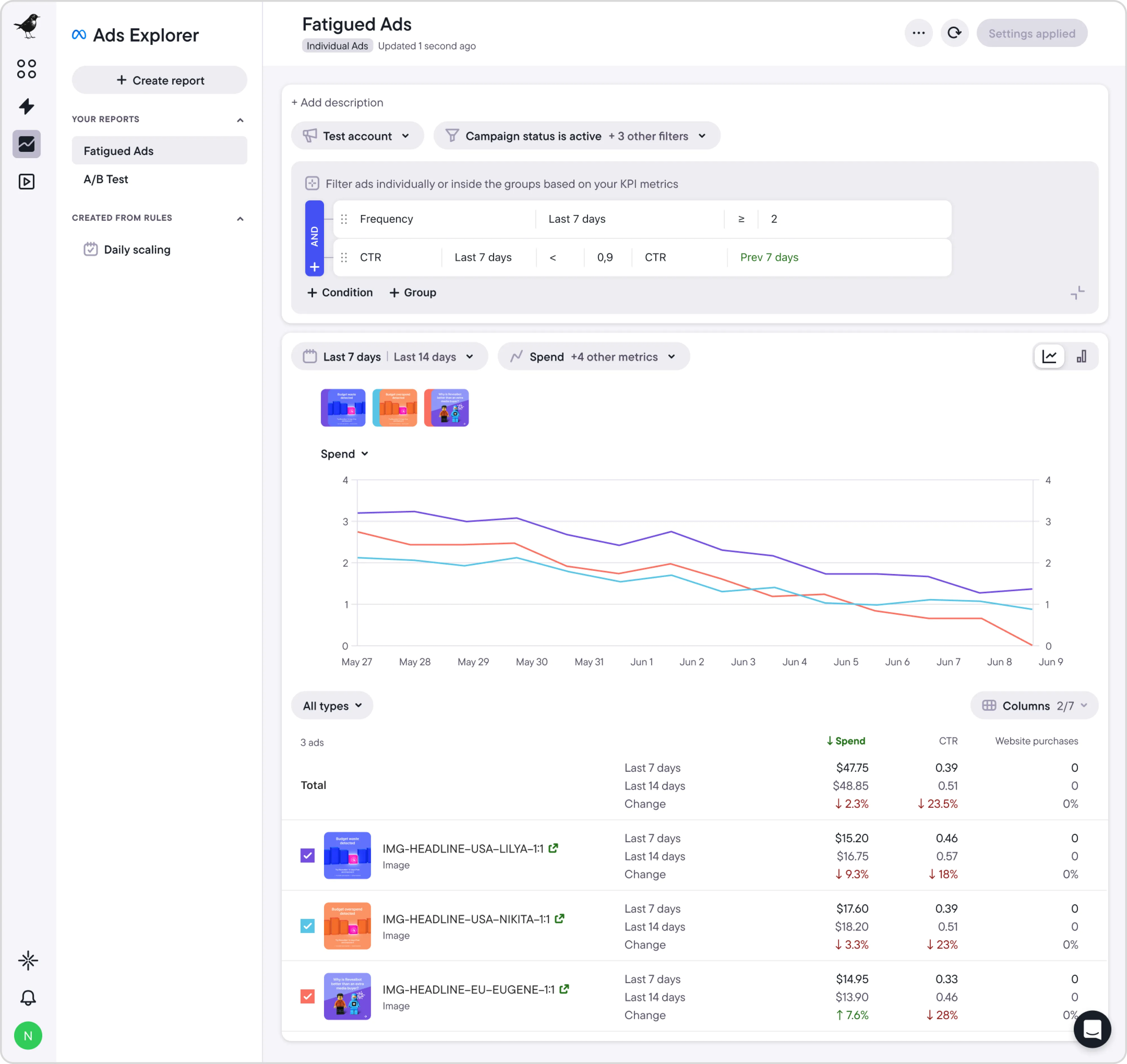

- Use Bïrch (formerly Revealbot) to automate testing, monitor fatigue, and extract insights at scale, so your creative engine runs continuously.

Why creative testing frameworks matter in 2025

Creative fatigue, audience fragmentation, and algorithm shifts have made creatives the most volatile—and valuable—lever in digital advertising. Bidding systems are increasingly automated. Targeting options are narrowing. What’s left is the creative itself: the message, hook, and visual frame.

Creative testing frameworks serve three essential functions:

Reduce performance volatility

- Regular testing ensures new high-performing assets enter rotation before fatigue hits.

- Testing pipelines smooth out week-to-week swings in CTR, CPA, and ROAS.

Improve budget efficiency

- Waste less spend on underperforming concepts by systematically identifying what works.

- Stronger creatives accelerate learning phases on platforms like Meta and TikTok.

Enable scalable growth

- Testing frameworks create predictable creative pipelines. Don’t scramble for new ideas. Instead, run structured sprints that produce testable variations on schedule.

- Learnings from one product or market can be quickly adapted across geos, audience segments, and platforms.

🔄 Without a testing framework, most teams alternate between over-reacting to short-term performance drops and under-reacting to creative fatigue signals.

A solid framework allows you to stay proactive. You’re not waiting for performance to drop before fixing creative—you’re constantly feeding the system with new, validated assets.

What is creative testing?

Creative testing is often confused with general A/B testing or ad hoc content swaps. A creative testing framework is more structured and deliberate.

At its core, creative testing is a systematic process for evaluating how different creative variables impact ad performance.

What it focuses on:

- The concept: Is the core idea resonating?

- The message: Are we framing benefits in a way that connects?

- The execution: Are visuals, copy, and formats maximizing attention and action?

The goal isn’t to pick the prettiest ad. It’s to identify which creative elements reliably move business outcomes like:

- Lower CPA

- Higher CTR

- Improved ROAS

- Stronger audience retention

The best-looking creative won’t necessarily be the best-performing. A successful creative earns attention and drives action—consistently.

Core elements of a high-performance creative testing framework

Effective creative testing starts with clear business alignment, prioritized hypotheses, and well-structured tests. Without these elements, testing is nothing more than random experimentation.

Setting clear testing goals and KPIs

Every test should tie directly to a business outcome. Define what success looks like before you start.

Common KPI examples:

- Top of funnel: CTR, video view rate, thumb stop ratio

- Consideration stage: add-to-cart rate, lead conversion rate

- Purchase stage: CPA, ROAS, revenue per impression

If your goal is to lower acquisition cost, optimize for CPA—not just CTR or engagement.

Clearly defined KPIs:

- Guide creative briefs

- Inform test design

- Make success measurable
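To make the funnel metrics above concrete, here is a minimal sketch of how CTR, CPA, and ROAS fall out of raw delivery numbers. The `VariantStats` fields and the example figures are illustrative assumptions, not an export format from any specific ad platform.

```python
from dataclasses import dataclass


@dataclass
class VariantStats:
    """Raw delivery numbers for one ad variant (field names are illustrative)."""
    impressions: int
    clicks: int
    conversions: int
    spend: float
    revenue: float


def kpis(stats: VariantStats) -> dict:
    """Return CTR, CPA, and ROAS; None where the denominator is zero."""
    return {
        "ctr": stats.clicks / stats.impressions if stats.impressions else None,
        "cpa": stats.spend / stats.conversions if stats.conversions else None,
        "roas": stats.revenue / stats.spend if stats.spend else None,
    }


# Hypothetical numbers for a single variant.
example = VariantStats(
    impressions=20_000, clicks=300, conversions=15, spend=450.0, revenue=1_350.0
)
result = kpis(example)
print(result)
```

Computing all three side by side makes it easier to spot a variant that wins on CTR but loses on CPA, which is the case the guidance above warns about.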

Prioritizing hypotheses based on impact

Prioritize based on:

- Impact potential: Which message or creative element could realistically move KPIs?

- Effort required: What’s feasible within your production cycle?

- Learning value: Will this test teach you something useful for future briefs?

Sample hypothesis format: “We believe demonstrating social proof upfront will increase CTR by 15% compared to product-only creatives.”
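One way to apply the three criteria is a simple backlog score. The sketch below is an assumption for illustration: the 1–5 scales, the `(impact + learning) / effort` formula, and the example hypotheses are hypothetical, and your team may weight the criteria differently.

```python
from dataclasses import dataclass


@dataclass
class Hypothesis:
    statement: str
    impact: int    # 1-5: how much the change could realistically move the KPI
    effort: int    # 1-5: production work required (higher means more work)
    learning: int  # 1-5: how reusable the answer is for future briefs

    @property
    def score(self) -> float:
        # Assumed scoring rule: reward impact and learning, penalize effort.
        return (self.impact + self.learning) / self.effort


backlog = [
    Hypothesis("Social proof upfront lifts CTR vs. product-only", impact=4, effort=2, learning=4),
    Hypothesis("New CTA phrasing lifts add-to-cart rate", impact=2, effort=1, learning=1),
    Hypothesis("Full video reshoot with lifestyle framing", impact=5, effort=5, learning=3),
]

# Highest score first: these are the tests to run in the next cycle.
ranked = sorted(backlog, key=lambda h: h.score, reverse=True)
for h in ranked:
    print(f"{h.score:.1f}  {h.statement}")
```

The exact formula matters less than scoring every idea the same way, so the backlog order is not decided by whoever argues loudest.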

Structuring tests: A/B, multivariate, concept-level

A/B testing—simple head-to-head comparison. Best for:

- Isolating one variable (e.g., headline, CTA, hook)

- Getting a quick read on major directional changes

Multivariate testing (MVT)—testing multiple variables simultaneously. Best for:

- Early-stage discovery across message combinations

- High-volume accounts with sufficient data to power complex splits

Concept-level testing—compares entire creative approaches (e.g., emotional vs. rational messaging). Best for:

- Early-stage creative strategy development

- Large-scale refresh cycles
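To see why multivariate testing needs high-volume accounts, it helps to count the cells. The sketch below enumerates a full-factorial design; the element lists and the per-cell impression target are assumed values for illustration only.

```python
from itertools import product

# Hypothetical creative elements to combine.
headlines = ["Free trial", "Try free for 30 days"]
visuals = ["UGC video", "Polished static", "Product demo"]
ctas = ["Start now", "Learn more"]

# Every headline x visual x CTA combination is a separate test cell.
cells = list(product(headlines, visuals, ctas))

impressions_per_cell = 10_000  # assumed minimum read per cell
total_impressions = len(cells) * impressions_per_cell

print(f"{len(cells)} cells, {total_impressions:,} impressions needed")
```

Adding one more option to any element multiplies the cell count, so the volume requirement grows much faster than it does for a two-variant A/B test.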

Building testable ad variations

Once you have defined your testing goals and hypotheses, the next step is to design ad variations that isolate learning points. This is where creative testing moves from theory into execution.

Concept vs. variation testing

Think of creative testing as having two layers: concept testing and variation testing.

Concept testing

- Test different big ideas (e.g., emotional appeal vs. product benefit)

- Offers high learning value early in a campaign cycle

Variation testing

- Test smaller execution tweaks within a proven concept

- Covers headlines, CTA phrasing, image selection, and video pacing

Start broad with concept tests. Once a direction shows promise, shift into variation testing to refine performance.

Testing copy, visuals, and formats separately

Isolate single creative elements to avoid false conclusions.

- Copy: headline structure, benefit framing, CTA language

- Visuals: static vs. video, UGC vs. polished, product focus vs. lifestyle

- Format: aspect ratio, platform-native templates, interactive elements

Example: When testing copy such as “Free trial” vs. “Try free for 30 days,” use identical visuals to isolate the effect of the copy.

Balancing branded templates with new creative ideas

Branded templates bring consistency to creative testing but can limit exploration. Build a testing library that includes:

- Core templates for always-on campaigns

- Experimental variations to probe new angles

- Seasonal or event-based concepts to refresh fatigue cycles

Use templates as a stable foundation, but keep feeding new ideas into the system.

Launching and managing creative tests

Strong creative matters, but how you test it determines whether you learn something useful or just burn budget.

Budget allocation and test duration

Budget matters for data quality. Underfunded tests often produce inconclusive results.

- Allocate enough budget per variant to reach statistical confidence

- Avoid testing too many variants at once (2–4 variants per test cycle is often optimal)

- Let tests run long enough to stabilize, but not so long that results lose relevance

Best practice: Aim for ~1,000 conversions or ~10,000 impressions per variant for early directional reads. For stronger statistical confidence, scale volume as budget allows.
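For a rough sense of how much volume "stronger statistical confidence" implies, here is a sketch of the standard two-proportion sample-size approximation. The baseline CTR, the 15% relative lift, and the 80% power setting are assumptions chosen for illustration; plug in your own numbers.

```python
from math import ceil
from statistics import NormalDist


def impressions_per_variant(base_rate, relative_lift, confidence=0.95, power=0.80):
    """Approximate impressions per variant needed to detect a relative lift
    in a rate (e.g., CTR) with a two-sided two-proportion test."""
    p1 = base_rate
    p2 = base_rate * (1 + relative_lift)
    z_alpha = NormalDist().inv_cdf(1 - (1 - confidence) / 2)
    z_beta = NormalDist().inv_cdf(power)
    variance = p1 * (1 - p1) + p2 * (1 - p2)
    return ceil((z_alpha + z_beta) ** 2 * variance / (p2 - p1) ** 2)


# Assumed scenario: 1.5% baseline CTR, hoping to detect a 15% relative lift.
needed = impressions_per_variant(base_rate=0.015, relative_lift=0.15)
print(f"{needed:,} impressions per variant")
```

With these assumed inputs the estimate lands in the tens of thousands of impressions per variant, which is why a ~10,000-impression read is best treated as directional. Smaller expected lifts push the requirement up sharply.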

Traffic segmentation (geo, platform, and audience)

Split audiences carefully to avoid contaminating test results:

- Isolate traffic by geography if cultural context might affect results.

- Run platform-specific tests separately (Meta vs. TikTok vs. YouTube).

- Control for audience overlap to prevent spillover bias.

Example: Testing a “free trial” message in both the US and Germany might produce different results—not because of the creative itself, but because of local purchasing behavior.

Avoiding overlap and data contamination

The more overlapping campaigns or conflicting signals you run, the harder it becomes to interpret outcomes.

Common contamination risks:

- Simultaneous brand vs. direct response campaigns targeting the same users

- Retargeting pools overlapping with prospecting tests

- Platform algorithms reallocating budget unevenly mid-test

Solutions:

- Use dedicated test budgets where possible

- Limit simultaneous experiments within overlapping cohorts

- Apply frequency caps and pacing controls to balance delivery

Analyzing results and scaling winners

Creative tests are only valuable if you can read the results correctly—and use them to inform actionable next steps. This phase separates real learnings from empty data.

Evaluating statistical significance

Not every lift is meaningful. Before declaring a “winner,” check:

- Sample size: Did you collect enough impressions or conversions?

- Variance: Are differences stable or fluctuating day to day?

- Confidence levels: Aim for 90–95% confidence when possible.

A 0.3% CTR difference on 500 impressions is likely noise. But a 1.2% lift on 50,000 impressions could signal a real performance shift.
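The same intuition can be checked with a two-proportion z-test. The sketch below uses only the Python standard library; the click and impression counts are hypothetical, chosen to contrast a small sample with a large one.

```python
from math import sqrt
from statistics import NormalDist


def two_proportion_p_value(clicks_a, imps_a, clicks_b, imps_b):
    """Two-sided p-value for the difference between two click-through rates."""
    p_a = clicks_a / imps_a
    p_b = clicks_b / imps_b
    pooled = (clicks_a + clicks_b) / (imps_a + imps_b)
    se = sqrt(pooled * (1 - pooled) * (1 / imps_a + 1 / imps_b))
    if se == 0:
        return 1.0  # no clicks (or all clicks) in both arms: nothing to compare
    z = (p_b - p_a) / se
    return 2 * (1 - NormalDist().cdf(abs(z)))


# Hypothetical small test: 1.0% vs. 1.4% CTR on 500 impressions each.
p_small = two_proportion_p_value(5, 500, 7, 500)

# Hypothetical large test: 1.5% vs. 1.7% CTR on 50,000 impressions each.
p_large = two_proportion_p_value(750, 50_000, 850, 50_000)

print(f"small sample p-value: {p_small:.3f}")
print(f"large sample p-value: {p_large:.3f}")
```

A p-value below 0.05 corresponds to the 95% confidence target; below 0.10 corresponds to 90%. In this example the small test shows the bigger gap between variants yet fails both thresholds, while the large test clears them.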

Interpreting creative insights vs. channel noise

Platform algorithms introduce variability through:

- Delivery timing

- Auction dynamics

- Audience rotation

Separate real creative learning from platform effects by:

- Comparing multiple time windows

- Looking at consistent trends across platforms

- Pairing quantitative results with qualitative creative review

Example: If a “testimonial” hook consistently outperforms across both Meta and YouTube, it’s likely a creative insight rather than a platform quirk. If it only performs well on YouTube, channel-specific dynamics may be at play.

Systematizing learnings into creative briefs and templates

Developing an effective testing framework is about building a creative knowledge base—not just picking winning elements.

Systematize your learnings after each test cycle by:

- Documenting what worked (and why)

- Tagging assets by concept, hook, and audience segment

- Feeding learnings directly into your next creative brief
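A tagged learnings log can be as simple as a list of structured records. The sketch below is one possible shape, not a prescribed schema: the `Learning` fields, the asset IDs, and the example entries are all hypothetical.

```python
from collections import defaultdict
from dataclasses import dataclass


@dataclass(frozen=True)
class Learning:
    asset_id: str
    concept: str
    hook: str
    audience: str
    platform: str
    won: bool   # did this asset beat its control?
    note: str   # the "why" behind the result


log = [
    Learning("a1", "social proof", "testimonial", "prospecting", "Meta", True, "Face in first 2s"),
    Learning("a2", "social proof", "testimonial", "prospecting", "YouTube", True, "Same cut, longer intro"),
    Learning("a3", "product benefit", "product demo", "prospecting", "Meta", False, "Slow opening"),
    Learning("a4", "product benefit", "product demo", "retargeting", "YouTube", True, "Feature close-up"),
]


def win_rate_by(entries, field):
    """Share of winning assets grouped by any tag (e.g., 'hook' or 'platform')."""
    totals = defaultdict(lambda: [0, 0])  # tag value -> [wins, tests]
    for entry in entries:
        key = getattr(entry, field)
        totals[key][0] += int(entry.won)
        totals[key][1] += 1
    return {key: wins / tests for key, (wins, tests) in totals.items()}


hook_rates = win_rate_by(log, "hook")
print(hook_rates)
```

Grouping by `hook`, `concept`, or `audience` turns individual test results into patterns you can cite in the next brief.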

Advanced creative testing tactics and trends for 2026

As platforms evolve and creative complexity grows, ad hoc experimentation isn’t enough. Performance marketers are adopting more sophisticated methods to run creative testing as a system.

Machine-learning-powered creative scoring

AI tools now allow teams to analyze creative assets and identify patterns across large data sets, revealing more than simple A/B results.

Examples of ML-powered scoring:

- Identifying which hook types (testimonial, product demo, emotional trigger) lift CTR across multiple markets

- Scoring ad variants on predicted fatigue timelines

- Detecting the combinations of copy, visuals, and formats that correlate with ROAS improvements

Frameworks for continuous testing at scale

Instead of seasonal “creative refreshes,” high-performing teams now run continuous testing loops:

- Small batch tests launched weekly or biweekly

- Rolling in new variants while retiring fatigued assets

- Prioritizing tests by business impact and audience segment gaps

Benefits:

- Faster fatigue detection

- A steady pipeline of new assets

- More stable performance over time

Aligning testing cadence with media buying cycles

Testing should support—not disrupt—media buying rhythms.

Example sync points:

- New creative batches aligned with budget ramp periods

- Extra testing in the run-up to major seasonal promos

- Test pauses during conversion-sensitive windows (e.g., Black Friday and Cyber Monday, or BFCM)

The goal is to balance learning with stability—testing when it’s safe to do so, and prioritizing performance when needed.

Common creative testing mistakes (and how to avoid them)

Even structured creative testing can go off track if you’re not careful. Here are some of the most frequent pitfalls and how to design your framework to prevent them.

Misreading early signals

The mistake:

- Declaring winners too soon based on small data sets

- Overreacting to short-term performance spikes or drops

Solution:

- Set minimum impression or conversion thresholds before analyzing

- Look for stability across multiple days or budget cycles

- Use confidence intervals, not just raw deltas
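To illustrate the last point, the sketch below computes a simple (Wald) confidence interval for the difference between two rates. The counts are hypothetical, and the normal approximation used here is an assumption that gets shaky when clicks or conversions are very sparse.

```python
from math import sqrt
from statistics import NormalDist


def diff_confidence_interval(events_a, n_a, events_b, n_b, confidence=0.95):
    """Wald confidence interval for (rate_b - rate_a)."""
    p_a = events_a / n_a
    p_b = events_b / n_b
    z = NormalDist().inv_cdf(1 - (1 - confidence) / 2)
    se = sqrt(p_a * (1 - p_a) / n_a + p_b * (1 - p_b) / n_b)
    diff = p_b - p_a
    return diff - z * se, diff + z * se


# Hypothetical early read: 5 vs. 7 clicks on 500 impressions each.
early = diff_confidence_interval(5, 500, 7, 500)

# Hypothetical mature read: 750 vs. 850 clicks on 50,000 impressions each.
mature = diff_confidence_interval(750, 50_000, 850, 50_000)

for label, (low, high) in (("early", early), ("mature", mature)):
    crosses_zero = low <= 0 <= high
    print(f"{label}: [{low:+.4f}, {high:+.4f}] crosses zero: {crosses_zero}")
```

If the interval crosses zero, the raw delta could plausibly be a loss as easily as a win, so the test needs more data before anyone declares a winner.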

Testing too many variables at once

The mistake:

- Changing headlines, visuals, formats, and offers simultaneously

- Not being able to attribute results to any one change

Solution:

- Isolate one primary variable per test phase (e.g., headline OR format)

- Use multivariate designs only when budget and volume support them

- Break complex tests into phased iterations

Creative fatigue and misattribution

The mistake:

- Interpreting fatigue-driven declines as creative failure

- Scaling assets for too long without monitoring decay

Solution:

- Track fatigue curves for each asset type

- Rotate creatives proactively before full decay sets in

- Use frequency caps and decay indicators in reporting
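A basic decay indicator can be built from daily CTR alone. The sketch below flags an asset when its smoothed CTR has dropped a set fraction below its own peak; the three-day window, the 30% threshold, and the sample series are assumptions to tune against your own data.

```python
def rolling_mean(values, window):
    """Trailing moving average; returns one value per full window."""
    return [
        sum(values[i - window + 1 : i + 1]) / window
        for i in range(window - 1, len(values))
    ]


def is_fatigued(daily_ctr, window=3, max_drop=0.30):
    """True when the latest smoothed CTR sits at least max_drop below its peak."""
    if len(daily_ctr) < window:
        return False  # not enough history to judge
    smoothed = rolling_mean(daily_ctr, window)
    peak = max(smoothed)
    if peak == 0:
        return False
    return (peak - smoothed[-1]) / peak >= max_drop


# Hypothetical daily CTR series for two assets.
fresh_asset = [0.018, 0.019, 0.020, 0.019, 0.020]
tired_asset = [0.020, 0.021, 0.020, 0.017, 0.014, 0.012, 0.011]

print("fresh asset fatigued:", is_fatigued(fresh_asset))
print("tired asset fatigued:", is_fatigued(tired_asset))
```

Comparing each asset against its own peak, rather than against other assets, helps separate fatigue-driven decline from a creative that never performed in the first place.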

Establish a repeatable creative testing engine with Bïrch

Creative testing isn’t about guesswork—it’s a repeatable system for finding what works and scaling it. When you test against clear goals and strong hypotheses, creative becomes a driver of predictable growth.

Ready to take the guesswork out of your creative testing? Bïrch gives your team a reliable engine to test creatives smarter, iterate faster, and scale with confidence.

FAQs

What’s the difference between A/B testing and multivariate testing?

A/B testing isolates one variable (e.g., headline vs. headline) to see which performs better. Multivariate testing evaluates multiple variables (headline + image + CTA) at the same time. It requires much larger sample sizes for reliable results.

How many ad creatives should I test at once?

Generally, testing two to four variants per cycle balances learning speed with statistical power. More variants dilute budget and may delay clear results.

What’s the minimum budget for statistically significant results?

While it varies by KPI, a good rule is to reach ~1,000 conversions or ~10,000+ impressions per variant before making decisions. Smaller samples risk false positives.

What tools help streamline the creative testing process?

- Bïrch: full-cycle creative testing framework with automation, scoring, and real-time reporting

- Meta Ads Manager: basic delivery insights

- Looker/Google Sheets: custom dashboards

- Slack: real-time performance alerts integrated via Bïrch

What happened to Revealbot?

Revealbot underwent a comprehensive rebrand and is now known as Bïrch. This transformation reflects our renewed focus on blending automation efficiency with creative collaboration.
