The advertising industry has gone through significant changes in recent years. Platforms move faster, audiences are unpredictable, and performance signals shift quickly. It’s more important than ever for advertisers to understand how different strategies impact sales so they can make more informed decisions.

And while many factors contribute to a campaign’s overall success, creative testing is still key to performance. According to Nielsen research, creative accounts for 47% of ad effectiveness, making it the largest single contributor to sales lift in successful campaigns.

Despite its importance, creative testing is fragile. Without clear structure, it’s hard to scale, easy to misinterpret, and often leads to underperforming ads and missed learning over time.

In this article, we’ll talk in depth about how manual creative testing can hurt ad performance—and how automation can improve testing, insights, and scalability.

Key takeaways

- Manual creative testing struggles at scale because it lacks the structure needed to evaluate performance consistently across campaigns and accounts.

- When infrastructure is missing, creative testing becomes reactive. Decisions lag behind performance changes, and wasted spend increases as issues are caught too late.

- Uneven delivery and manual reviews make fair comparisons difficult, especially as creative volume and account complexity grow.

- Automation turns creative testing into a continuous process, allowing teams to act on performance signals while they still matter.

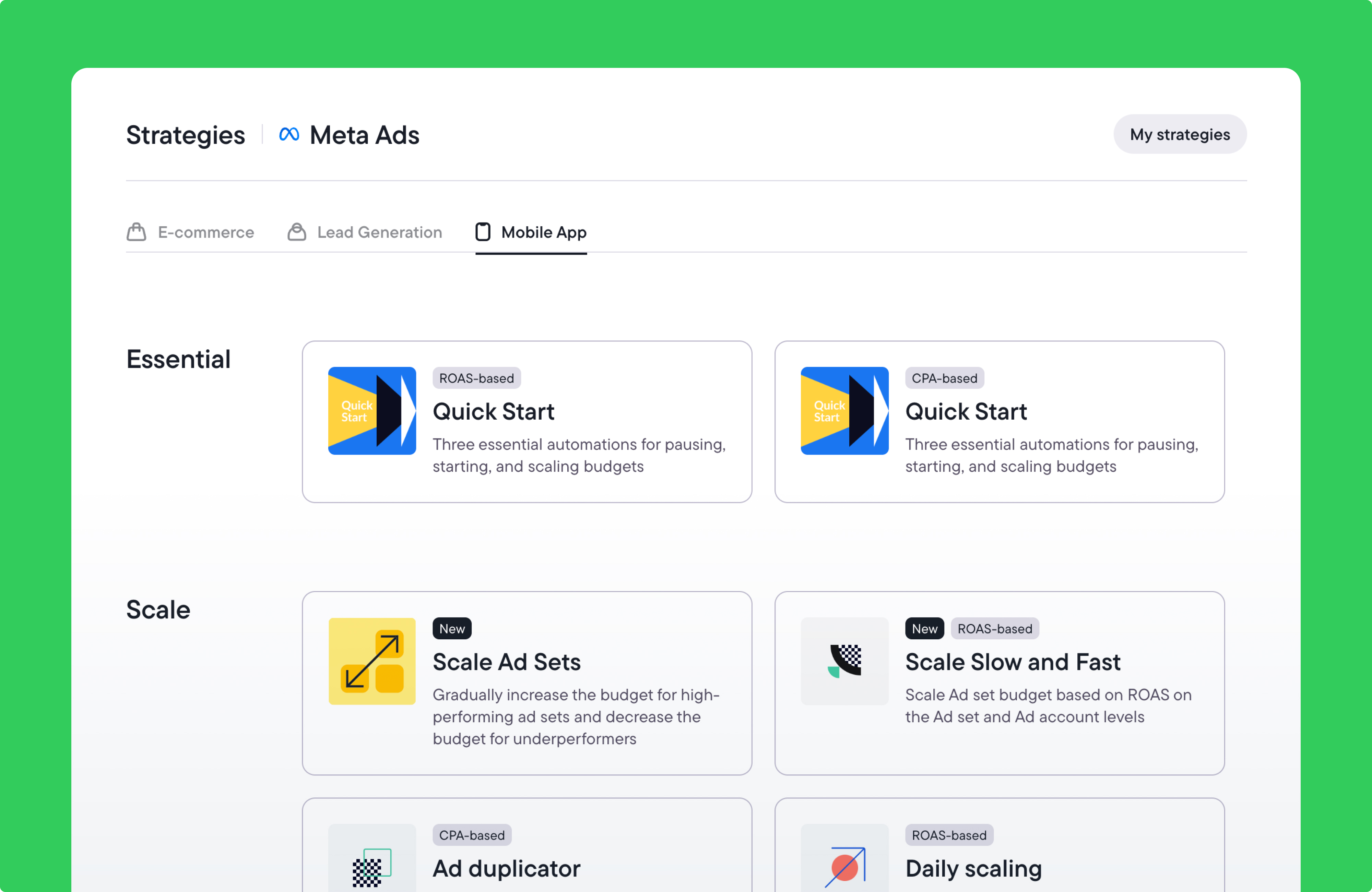

- Bïrch’s Launcher feature standardizes how creative tests are executed, while Explorer aggregates and analyzes results across campaigns—making it easier to identify patterns and apply learnings consistently.

The real creative testing problem no one talks about: infrastructure

One of the reasons why your creative testing efforts might be failing is the lack of infrastructure behind them. This can make testing inconsistent and difficult to scale.

If you’re only running a few ads with a limited budget, manual creative testing can be effective because you can monitor performance closely and make quick decisions when needed.

However, as campaigns grow, your testing approach may become harder to maintain. Without a clear structure in place, performance data ends up dispersed across disconnected dashboards, reports, and ad accounts. Teams manually pull metrics, compare results at different points in time, and reconcile numbers that were never designed to be evaluated together.

Inconsistency arises from the lack of a shared creative testing framework. Creatives are launched under different conditions, reviewed using different metrics, and evaluated at different stages of delivery.

Without a standard infrastructure, each metric is interpreted in isolation.

And when insights feel shallow or repetitive, it’s often because there’s no system capturing learnings in a way that makes them reusable.

Not having a solid infrastructure makes creative testing expensive. You might see this in wasted media spend. Budget will keep flowing to underperforming creatives if you don’t catch them early enough.

Less visible, but often more costly, is the loss of learning. When results aren’t evaluated consistently, it’s impossible to tell reliably what caused a creative to fail. Was it the message, the format, the audience, or the timing?

In this context, scaling creative output becomes a gamble. Without reliable signals, teams can’t scale with confidence and risk increasing cost without improving results.

Is manual creative testing diminishing your growth?

In many teams, manual creative testing quietly limits growth by slowing decisions and delaying learning.

Creatives are launched across campaigns or ad sets and are left to run for a period of time. Performance is then reviewed using certain metrics, typically CTR, CPA, or ROAS. Based on those results, actions are taken.

This cycle repeats week after week. Teams wait for enough data to form conclusions, review performance after the fact, and make changes once trends are already visible.

Here’s where it gets tricky.

By the time teams take action, a creative may already be losing efficiency or showing early signs of fatigue. At the same time, with manual creative testing, creatives rarely receive delivery evenly. Without proper controls, performance comparisons become unreliable.

As a result, teams end up comparing assets that ran under different conditions—budgets, audiences, timeframes, or placements—which can lead to misleading conclusions, even when the numbers look clear.
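One way to guard against this is to hold back any creative that has not yet received enough delivery to be judged. The sketch below is a minimal illustration, not a Bïrch feature: the `CreativeStats` fields, the `split_by_delivery` helper, and the 1,000-impression floor are all assumptions chosen for the example.

```python
from dataclasses import dataclass

# Hypothetical minimum delivery before a creative is judged; tune per account.
MIN_IMPRESSIONS = 1000


@dataclass
class CreativeStats:
    name: str
    impressions: int
    clicks: int

    @property
    def ctr(self) -> float:
        # Click-through rate; 0.0 when the creative has no delivery yet.
        return self.clicks / self.impressions if self.impressions else 0.0


def split_by_delivery(stats, min_impressions=MIN_IMPRESSIONS):
    """Separate creatives with enough delivery to compare from those still waiting."""
    ready = [s for s in stats if s.impressions >= min_impressions]
    waiting = [s for s in stats if s.impressions < min_impressions]
    return ready, waiting


stats = [
    CreativeStats("hook_a", impressions=5000, clicks=90),
    CreativeStats("hook_b", impressions=4200, clicks=63),
    CreativeStats("hook_c", impressions=300, clicks=9),  # highest CTR, but on very little data
]
ready, waiting = split_by_delivery(stats)
```

Here `hook_c` has the highest CTR of the three, but only on 300 impressions, so it lands in `waiting` instead of being declared a winner too early.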

Manual testing is also prone to human error. Short-term fluctuations in performance can influence whether a creative is labeled a winner or a loser, and different reviewers may read the same numbers differently. Over time, this introduces inconsistency into the testing process, especially across different team members or accounts.

This is how manual creative testing starts limiting growth. When testing can’t keep pace with changing performance conditions, decisions become reactive, insights shallow, and scale increasingly difficult to manage with confidence.

Why creative testing needs to be automated

Manual processes simply weren’t designed to handle the volume, speed, and variability of modern paid media. As more creatives are launched across more campaigns, teams need ways to keep up without drowning in manual work.

This is where automation removes friction at execution—standardizing how tests are launched, monitored, and evaluated so teams can keep pace without losing control.

Automation contributes to creative intelligence: Automated creative testing evaluates performance using the same logic every time. Creatives are assessed against consistent criteria, and decisions don’t fluctuate based on who happens to be checking the account that day or how performance was last reviewed.

Time saving and faster learning: Because most advertisers have access to largely the same platforms, formats, and audiences, the real advantage comes from how quickly teams can learn from performance and apply those insights. Automation makes that simpler.

Instead of waiting days to find potential issues, automation can catch performance drops within hours, allowing teams to act while results are still relevant. This leads to:

- Faster detection of meaningful performance changes

- More objective decision-making across teams and accounts

- High-velocity testing

Analysis at scale: With automation, it’s possible to analyze thousands of data points and identify which creatives perform best. It also helps you predict ad fatigue and take timely action.

Automation as an organizational asset: Insights from automated creative testing continue to guide teams long after campaigns end, and even when team members change. Besides informing ad decisions, creative performance data informs messaging, positioning, and broader growth strategies.

Real advertiser experiences: life before and after automation

We’ve asked several leaders to describe how they have automated creative testing and the impacts they have seen.

Stas Slota runs performance marketing agency LeaderPrivate, which focuses on affiliate-driven verticals. He describes a structured but hands-on testing process. His team typically tests 10–15 creatives per campaign, built around a small number of defined hooks.

They monitor performance manually until creative volume increases or campaigns expand across multiple accounts. That’s when automation becomes a necessity.

“We believe automation to be a great solution, especially for testing creatives at scale, because it saves budget by catching underperforming creatives in real time. When you’re running 50–70+ creatives across multiple ad accounts, it becomes physically impossible to quality-track everything manually. Automation allows you to set specific performance thresholds—say, CPC under $0.50, CPM under $50, and cost per lead under $10—and the system alerts you immediately when a creative falls outside those parameters, telling you exactly which creative in which account needs to be paused.”
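The threshold check described in the quote can be sketched in a few lines. This is an illustration only: the three limits come from the quote, while the row fields (`account`, `creative`, `spend`, `impressions`, `clicks`, `leads`) and the helper names are assumptions, not the output of any particular ad platform or tool.

```python
# Limits taken from the quote: CPC under $0.50, CPM under $50, cost per lead under $10.
THRESHOLDS = {"cpc": 0.50, "cpm": 50.0, "cpl": 10.0}


def _cost_per(spend, count):
    # Money was spent with nothing to show for it: treat the cost as unbounded.
    if count == 0:
        return float("inf") if spend > 0 else 0.0
    return spend / count


def breaches(row):
    """Return the names of the metrics that exceed their limit for one creative."""
    metrics = {
        "cpc": _cost_per(row["spend"], row["clicks"]),
        "cpm": _cost_per(row["spend"] * 1000, row["impressions"]),
        "cpl": _cost_per(row["spend"], row["leads"]),
    }
    return [name for name, limit in THRESHOLDS.items() if metrics[name] > limit]


def alerts(rows):
    """List (account, creative, breached metrics) for every creative outside the limits."""
    return [
        (row["account"], row["creative"], failed)
        for row in rows
        if (failed := breaches(row))
    ]


rows = [
    {"account": "acct-1", "creative": "hook-a", "spend": 100, "impressions": 4000, "clicks": 250, "leads": 12},
    {"account": "acct-2", "creative": "hook-b", "spend": 120, "impressions": 2000, "clicks": 150, "leads": 8},
]
flagged = alerts(rows)
```

In this made-up data, `hook-a` stays inside all three limits, while `hook-b` in `acct-2` breaches every one of them and is the creative an alert would point to.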

For Ivan Vislavskiy at Comrade Digital Marketing Agency, the breaking point came as creative testing scaled across multiple clients and verticals.

Before automation, testing meant spreadsheets, screenshots, and long internal discussions about which ads “felt” stronger. But that dynamic changed once creative testing was automated. Wasted spend dropped, learning cycles accelerated, and teams were able to move to data-backed iteration.

“I still remember how messy it was before automation. We’d be buried in spreadsheets, digging through screenshots, jumping on Zoom calls trying to figure out which ad felt better. Everyone had an opinion, and it was just chaos. Now? It’s way smoother. We plug in the data, we see exactly what’s working with heatmaps, and we make decisions in minutes.”

David Hampian has led performance and integrated marketing teams across large organizations, and currently heads up Field Vision. He describes how manual creative testing simply stopped working as environments became faster and more complex.

Earlier in his career, creative testing was largely manual. It involved a limited set of ads, early performance signals, and decisions made in scheduled reviews. While that approach felt safe and controllable, it didn’t scale.

As teams grew, creative testing had to become automated and “always-on.” Instead of campaign-by-campaign reviews, systems were put in place to introduce new creative and surface patterns across performance data.

“Before automation, creative testing was episodic, slow, and heavily meeting-driven. After automation, it became continuous and embedded in how campaigns ran day to day. The biggest difference was not speed alone, but clarity. Automation made it easier to see what was working, apply those learnings to the next round of creative, and turn testing into a repeatable growth muscle instead of a one-off task.”

What automated creative testing looks like in practice

In the automated setup, the workflow starts with creative strategy: deciding what to test (new hooks, messages, formats, visuals).

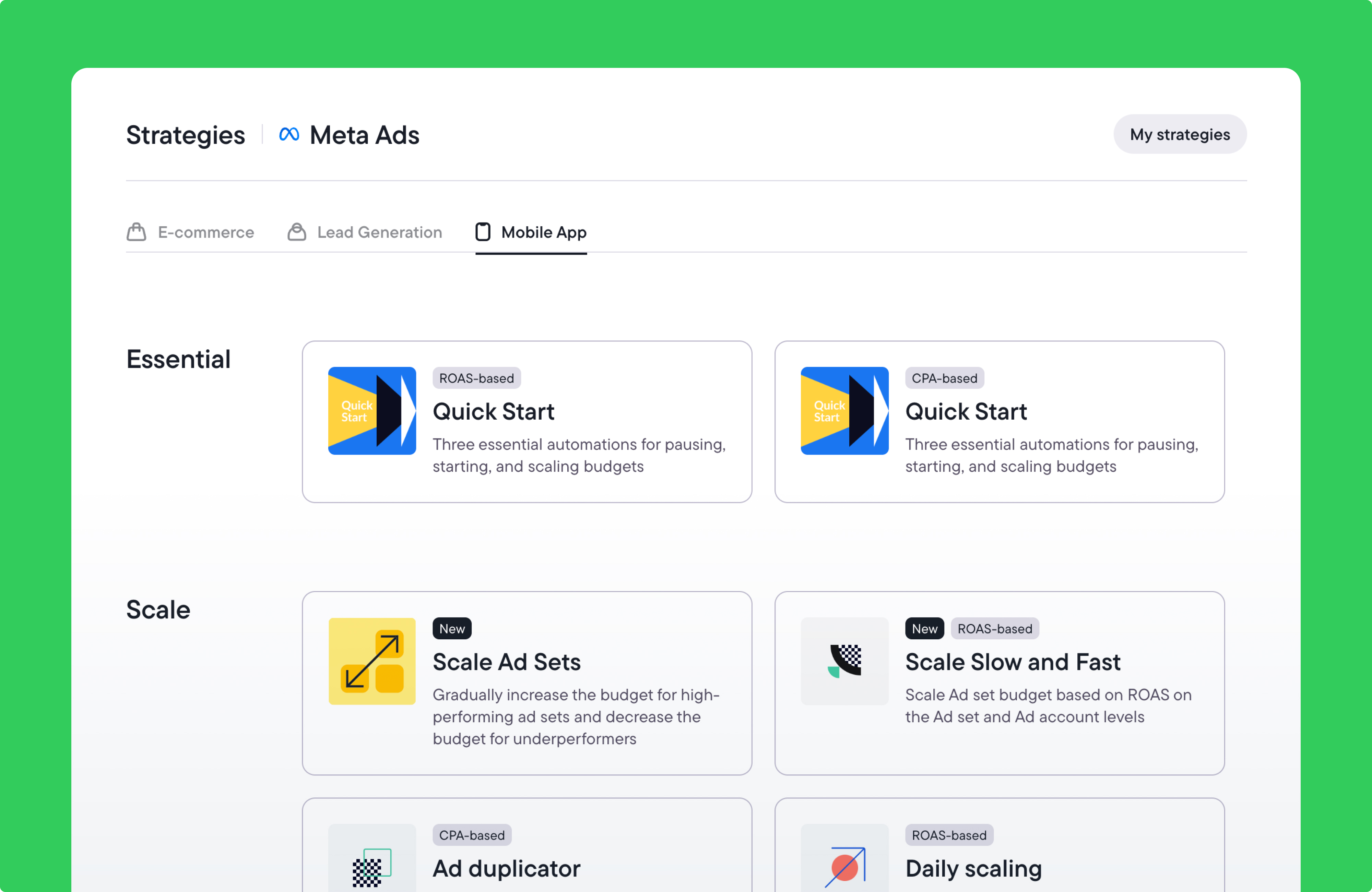

Using tools like Launcher, teams can generate and launch creative variations at scale while keeping structure intact.

A typical workflow looks like this:

First, creative assets are uploaded in bulk using Google Drive or local files. From there, structured creative variations are generated within a consistent framework, rather than spread across disconnected ad sets or spreadsheets.

Next, campaigns and ad sets are defined once. Ad set parameters are applied across all generated variations, ensuring each creative runs under comparable conditions.

Before launch, the setup is saved as a Launcher template. This creates a repeatable structure that can be reused across future tests, keeping execution consistent over time.

From this point on, the structured foundation makes it much easier and faster for teams to track performance and catch early signals such as underperformance.
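To make the idea of “defined once, applied everywhere” concrete, here is a minimal sketch of generating variations that share identical ad set parameters and a consistent naming scheme. It is not Launcher’s API: the `build_variations` function, the naming pattern, and the ad set fields are hypothetical and only illustrate the principle.

```python
from itertools import product


def build_variations(test_name, hooks, formats, ad_set):
    """Create one variation per hook/format pair, all sharing the same ad set settings."""
    variations = []
    for hook, fmt in product(hooks, formats):
        variations.append({
            "name": f"{test_name}_{hook}_{fmt}",  # consistent, parseable naming
            "hook": hook,
            "format": fmt,
            "ad_set": dict(ad_set),  # copy, so every variation starts from identical settings
        })
    return variations


# Hypothetical ad set parameters, defined once for the whole test.
shared_ad_set = {"daily_budget": 50, "audience": "lookalike_1pct"}
variations = build_variations("q3test", ["pain", "proof"], ["video", "static"], shared_ad_set)
```

Because every variation carries the same settings and a name that encodes its hook and format, later analysis can group results by those elements without manual cleanup.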

Now, let’s consider potential scenarios and how they can impact your campaign outcomes.

Picture this. You launch a new set of creatives, and performance looks strong in the first few days. Engagement is high, costs are stable, and scaling feels safe. As the scale increases, results start to drop, and the creative that has been performing so well starts to lose momentum.

This is creative fatigue, and it’s one of the hardest things to manage manually.

In a manual setup, fatigue is usually identified after the performance has already dropped. By this time, the spend has already been wasted.

However, with automation in place, fatigued creatives can be paused or deprioritized as soon as early signals appear—allowing budget and delivery to shift quickly to fresher, higher-performing assets before waste adds up.
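What counts as an “early signal” depends on the account, but one simple version compares recent CTR with the best earlier stretch. The sketch below assumes a list of daily CTR values per creative; the three-day window and the 25% drop are illustrative defaults, not recommended settings or the logic of any specific tool.

```python
def is_fatiguing(daily_ctr, window=3, drop=0.25):
    """Flag a creative whose latest `window`-day mean CTR has fallen at least
    `drop` (as a fraction) below its best earlier, non-overlapping window."""
    if len(daily_ctr) < 2 * window:
        return False  # not enough history to compare two separate windows

    # Rolling means: means[i] covers days i .. i + window - 1.
    means = [
        sum(daily_ctr[i:i + window]) / window
        for i in range(len(daily_ctr) - window + 1)
    ]
    recent = means[-1]
    peak = max(means[:-window])  # only windows that do not overlap the recent one
    return peak > 0 and recent <= peak * (1 - drop)


declining = [0.020, 0.021, 0.022, 0.021, 0.016, 0.014, 0.013]
stable = [0.020] * 7
```

With these numbers, `is_fatiguing(declining)` returns `True` because the last three days average well under the earlier peak, while `is_fatiguing(stable)` returns `False`.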

That kind of early protection is powerful on its own, but it’s only part of the picture.

Once testing becomes consistent and reliable, the focus naturally shifts from “which single ad won this round” to something much more powerful: understanding why certain elements work.

Structured testing at scale makes this possible. When every creative runs under comparable conditions, teams can see which messages, hooks, or formats work, and where those patterns repeat.

Those insights don’t have to stay confined to a single ad account. Messaging that performs well in paid social can inform landing pages, email campaigns, or even broader brand positioning. Creative testing stops being channel-specific and starts feeding into the overall marketing strategy.

But to turn consistent testing into repeatable insight, teams need visibility beyond individual ads.

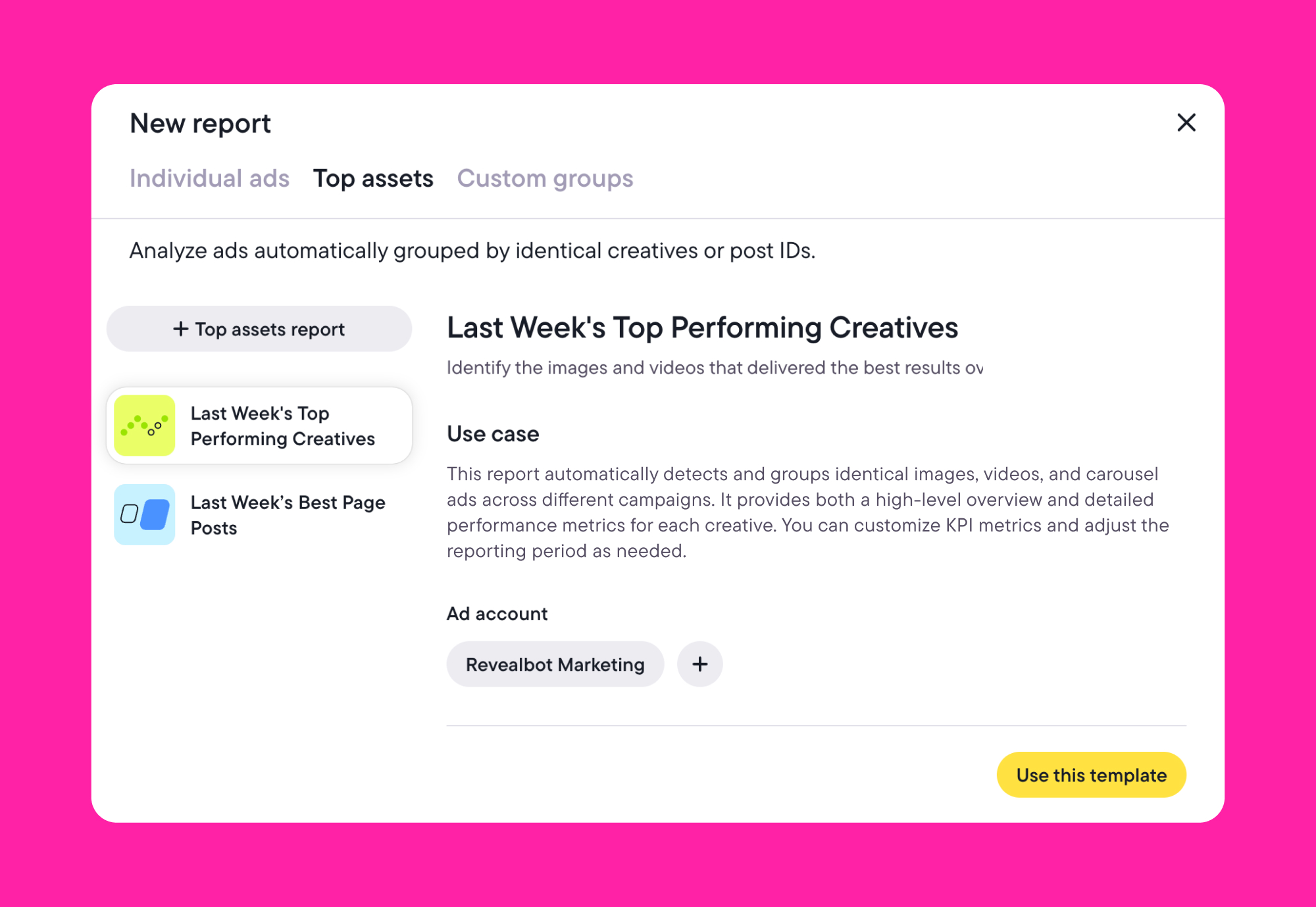

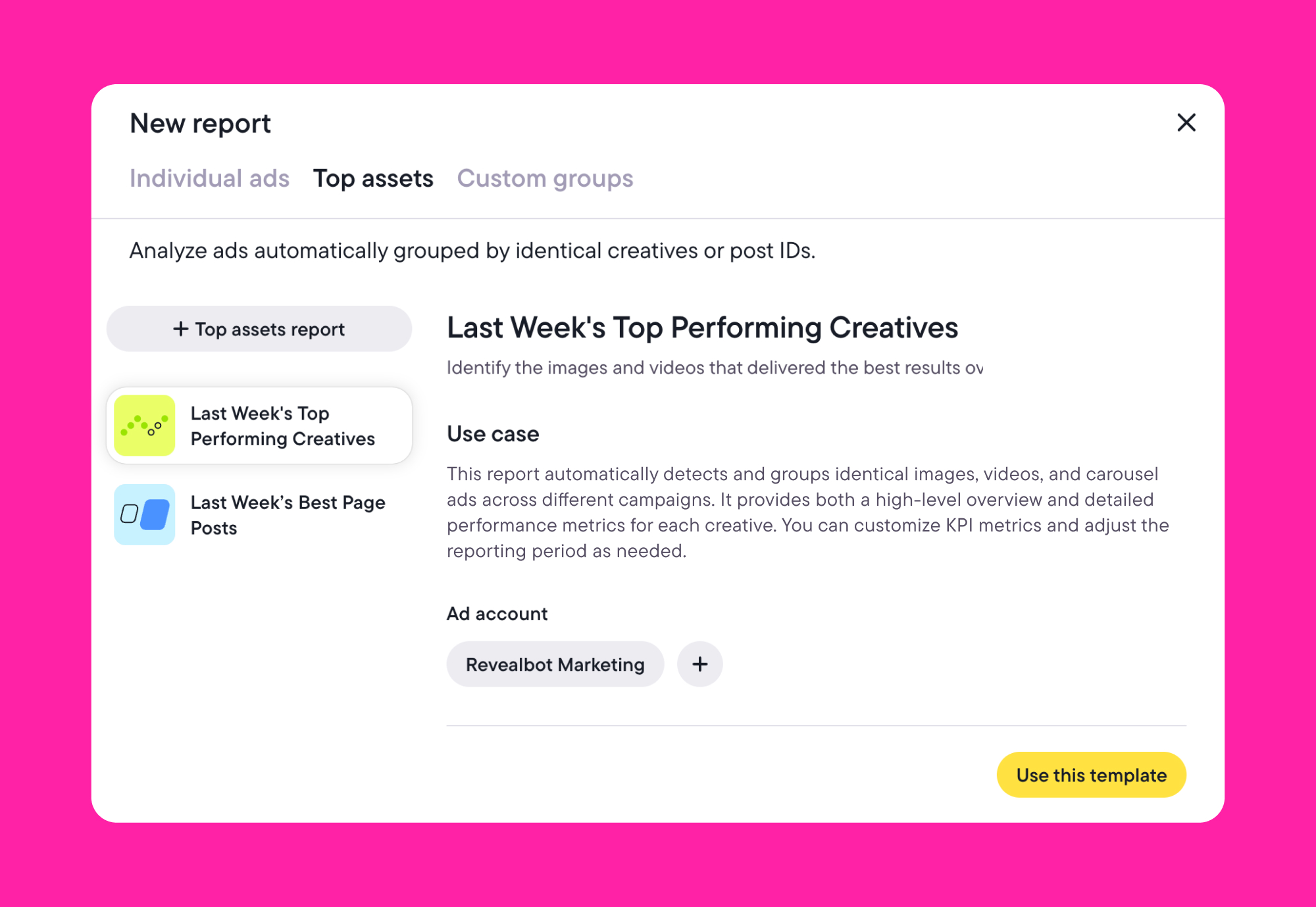

With tools like Bïrch Explorer, those insights can be analyzed across campaigns and accounts, making it easier to identify which themes, formats, or messages consistently drive results and apply those learnings across channels.
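The underlying idea of cross-campaign analysis is to group results by a creative element instead of by individual ad. A minimal sketch follows, assuming each result row is already tagged with its hook; the field names and the `roas_by_tag` helper are hypothetical and not how Explorer works internally.

```python
from collections import defaultdict


def roas_by_tag(rows, tag):
    """Pool spend and revenue per tag value across all campaigns, then compute ROAS."""
    spend = defaultdict(float)
    revenue = defaultdict(float)
    for row in rows:
        spend[row[tag]] += row["spend"]
        revenue[row[tag]] += row["revenue"]
    # Skip tags with no spend to avoid dividing by zero.
    return {value: revenue[value] / spend[value] for value in spend if spend[value] > 0}


rows = [
    {"campaign": "A", "hook": "pain", "spend": 100, "revenue": 300},
    {"campaign": "B", "hook": "pain", "spend": 100, "revenue": 200},
    {"campaign": "A", "hook": "proof", "spend": 200, "revenue": 200},
]
result = roas_by_tag(rows, "hook")
```

Totals are pooled before dividing, so a small campaign with an unusually high ROAS does not outweigh a larger one the way a simple average of ratios would.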

How to build a scalable creative testing infrastructure today

A scalable testing system creates rules for how testing happens and ensures decisions are made the same way each time. At a minimum, that means:

- Consistent evaluation logic: Creatives should be judged using the same criteria across campaigns, accounts, and timeframes. Without this, results are impossible to compare, and learnings don’t compound.

- Continuous monitoring: The system should evaluate performance as data comes in and act before inefficiencies grow.

- Automation at the execution layer: People can’t match automated systems when it comes to monitoring performance at scale. Automation frees up time for areas that need human oversight, like strategy and creative direction.

- Learning that carries forward: A strong system captures patterns, such as which hooks, formats, and messages tend to work consistently, so that each test informs the next.

In practice, this is where many teams fall short. They may have a clear testing strategy, but lack the execution layer needed to apply it consistently across live campaigns.

This is exactly the gap Launcher is designed to fill. Launcher sits at the execution layer of creative testing. It doesn’t replace creative strategy or ideation. Teams still define what they want to test and how success should be measured, and Launcher operationalizes those decisions.

Launcher standardizes how creatives are launched using templates, validation checks, and a consistent structure. Creative variants are generated in bulk, ad set parameters are defined once, and naming and setup remain consistent—so tests start under comparable conditions rather than ad hoc execution.

After launch, Bïrch Rules can monitor performance across all your active campaigns. Using flexible conditions (including metric comparisons, ranking logic, and nested AND/OR rules), it automatically executes multiple actions, such as pausing underperformers early, scaling high-performing creatives, or adjusting budget.
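To show what nested AND/OR conditions mean in practice, here is a small, generic rule evaluator. It is a sketch of the concept only and does not reflect Bïrch’s actual rule syntax: the `all`/`any` keys, the metric names, and the example limits are assumptions made for illustration.

```python
import operator

# Comparison operators a condition may use.
OPS = {"<": operator.lt, "<=": operator.le, ">": operator.gt, ">=": operator.ge}


def matches(condition, metrics):
    """Evaluate a condition tree: 'all' means AND, 'any' means OR, otherwise a metric comparison."""
    if "all" in condition:
        return all(matches(c, metrics) for c in condition["all"])
    if "any" in condition:
        return any(matches(c, metrics) for c in condition["any"])
    return OPS[condition["op"]](metrics[condition["metric"]], condition["value"])


# Hypothetical rule: pause when spend >= 20 AND (CPA > 30 OR CTR < 0.5%).
PAUSE_RULE = {
    "all": [
        {"metric": "spend", "op": ">=", "value": 20},
        {"any": [
            {"metric": "cpa", "op": ">", "value": 30},
            {"metric": "ctr", "op": "<", "value": 0.005},
        ]},
    ]
}

should_pause = matches(PAUSE_RULE, {"spend": 50, "cpa": 45, "ctr": 0.01})
```

The spend condition keeps the rule from firing on creatives that have barely started delivering, which ties back to the point about judging every creative by the same criteria.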

Updating your creative testing approach

Manual creative testing doesn’t fall short because of effort or creative quality. It breaks down when teams try to scale without the infrastructure needed to run tests consistently and respond to performance changes in time.

As campaigns scale, manual processes struggle to keep pace. Reviews become reactive, decisions lag behind what’s actually happening in the account, and learning slows just as complexity increases.

Improving creative testing means putting the right structure in place—standardizing setup, simplifying analysis, and making it easier to act as performance shifts.

Try Bïrch for free and automate creative testing across campaigns with consistent execution and insight.

FAQs

Manual testing isn’t outdated, but it doesn’t scale well on its own. As campaign volume and complexity increase, manual processes struggle to keep up with the speed and consistency required. Automation provides the infrastructure to execute and evaluate tests reliably, meaning marketers can focus more on creative strategy and results interpretation.

The main disadvantages of manual testing are slower decision-making, inconsistent evaluation, and limited scalability. As campaign volume increases, manual review becomes harder to maintain, leading to delayed actions, higher risk of error, and weaker learning over time.

Creative testing tools help teams launch, measure, and compare ad variations consistently across campaigns. They reduce reliance on manual reviews and make it easier to identify which creatives, messages, or formats drive results. Tools like Launcher help standardize how creatives are set up and launched, so tests run under comparable conditions. Then, Explorer helps analyze results across campaigns and accounts, making it easier to spot patterns and reuse what works.

A scalable creative testing framework defines how ads are tested, evaluated, and acted on consistently. It combines clear metrics, standardized launch conditions, ongoing monitoring, and a way to retain learnings over time.

Automated creative testing helps marketers evaluate performance consistently, catch issues earlier, and reduce wasted spend—turning creative testing into a scalable, repeatable system.